s2s-ai-challenge legacy

The s2s-ai-competition is officially over. However, the organizers encourage everyone to post new contributions on renku in the future and to take advantage of the software framework developed for the competition. This website and the renku projects will be kept available until December 2023 (the end of the S2S project).

Especially if your contribution beats the current leader, please contact Andy Robertson and Frederic Vitart.

The s2s-ai-challenge-scorer contributing to the leaderboard (now migrated) has stopped working, but you can still easily score your contributions manually (now migrated).

Competition Overview

- Goal: Improve subseasonal-to-seasonal precipitation and temperature forecasts with Machine Learning/Artificial Intelligence

- Flyer

- Competition runs on platform https://renkulab.io/

- How to join: https://renkulab.io/projects/aaron.spring/s2s-ai-challenge-template/ (now migrated)

- Contributions accepted: 1st June 2021 - 31st October 2021

- Data: via climetlab, see Data

- Website: https://s2s-ai-challenge.github.io

- Organized by: WMO/WWRP, WCRP, S2S Project in collaboration with SDSC and ECMWF


Table of Contents

- Announcements

- Description

- Timeline

- Prize

- Predictions

- Evaluation

- Data

- Training

- Discussions

- Leaderboard

- Rules

- Organizers

Announcements

2022-02-04: prize announcement

The expert peer review gave a pass on all five submissions. Based on the leaderboard the prizes go to:

🥇 (CHF 15,000): David Landry, Jordan Gierschendorf, Arlan Dirkson, Bertrand Denis (Centre de recherche informatique de Montréal and ECCC)

🥈 (CHF 10,000): Llorenç Lledó, Sergi Bech, Lluís Palma, Andrea Manrique, Carlos Gomez (Barcelona Supercomputing Center)

🥉 (CHF 5,000): Shanglin Zhou and Adam Bienkowski (University of Connecticut)

The organizers thank Frederic Pinault (ECMWF), Jesper Dramsch (ECMWF), Stephan Rasp (climate.ai) and Kenneth Nowak (USBR) for their expert reviews.

2022-01-26: prize announcement on 4 Feb 2022

- The announcement of prize will take place Friday February 4th 2022 14-15 UTC.

- Opening remarks:

- Jürg Luterbacher, Director of Science and Innovation Department, WMO

- Florian Pappenberger, Director of Forecast Department, ECMWF

- Roskar Rok, Head of Engineering, Swiss Data Science Center

- Two keynote lectures:

- recording

2022-01-04: link for conference session: 26 Jan 2022

- The conference-style session with 10 min presentation and 8 min Q&A will be held on 26 Jan 2022 in the S2S webinar. Watch the recording.

2021-11-23: date for conference session: 26 Jan 2022

- The conference-style session with 10 min presentation and 10 min Q&A will be held on 26 Jan 2022 in the S2S webinar, 32pm UTC.

- Written expert review comments will be posted two weeks before.

- The expert peer review will be pass/fail.

- Submitting teams have time until 5 December 2021 to improve the documentation of their method and code.

2021-11-04: Announcement of the score RPSS and beginning of open review

- Please see the leaderboard for the score RPSS.

- The open review has officially started. For questions, please go to the corresponding submission repository via the leaderboard and open an issue. Please follow the open review guidelines.

- We decided to send the five submissions with positive RPSS to expert peer review.

2021-10-22: Small modifications to s2saichallengescorer

- After last-minute community feedback, we decided to modify the way we average the RPS for the RPSS. Following Weigel et al. 2007 #50, !23 implements RPSS = 1 - <RPS_ML>/<RPS_clim>, whereas before we had RPSS = <1 - RPS_ML/RPS_clim>, where angle brackets <> denote the average of the scores over a given number of forecast–observation pairs. Furthermore, we now penalize NaNs where numerical values were expected with RPS=2.

- We restarted the s2saichallengescorer, which automatically fetches the new scores of previous submissions, so your old submissions are re-evaluated.

- Implementing this yields an RPSS benchmark from the ECMWF model of -0.00158017.
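To illustrate why the order of averaging matters, here is a minimal numpy sketch with made-up RPS values for four forecast–observation pairs at one grid cell. The function names and numbers are hypothetical and not taken from the scorer; the climatological RPS values are 2/9 or 5/9, the scores an equal-odds (1/3, 1/3, 1/3) tercile forecast obtains for a single observed category.

```python
import numpy as np


def rpss_mean_of_ratios(rps_ml, rps_clim):
    """Former convention: RPSS = <1 - RPS_ML / RPS_clim>."""
    return np.mean(1 - rps_ml / rps_clim)


def rpss_ratio_of_means(rps_ml, rps_clim):
    """Convention after !23 (Weigel et al. 2007): RPSS = 1 - <RPS_ML> / <RPS_clim>."""
    return 1 - np.mean(rps_ml) / np.mean(rps_clim)


# Hypothetical RPS values for four forecast-observation pairs at one grid cell
rps_ml = np.array([0.5, 0.3, 0.4, 0.1])
rps_clim = np.array([2, 5, 5, 2]) / 9  # equal-odds tercile forecast: 2/9 or 5/9

print(rpss_mean_of_ratios(rps_ml, rps_clim))  # ≈ 0.010
print(rpss_ratio_of_means(rps_ml, rps_clim))  # ≈ 0.164
```

With the former convention, the single pair with a small RPS_clim (ratio 2.25, i.e. a skill of -1.25) dominates the average; the ratio of means weights each pair by its contribution to the mean RPS instead.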

2021-09-30: Last month for submissions

- The submission deadline for this competition is one month away. The exact timestamp for git tags to be considered is Sunday 31st October 2021 23:59h GMT. As this date is Halloween and on a weekend, there is a 48h grace period until Tuesday 2nd November 2021 23:59h GMT to fix submissions with technical assistance via gitter. Please make your repositories public between Wednesday 3rd and Friday 5th November 2021.

- We recognize that it is very challenging to beat climatology on a global scale for these subseasonal forecasts, i.e. to get positive scores averaged over both variables and both lead times. We encourage everyone to submit their results, even with negative scores.

- If you want to confirm that the online s2saichallengescorer yields the same numerical score as the score calculated by skill_by_year, please ask Aaron in a private chat via gitter to confirm your score. Please ensure that you have followed all these steps beforehand.

2021-07-27:

- After community feedback, we changed the ground truth observation files: observations are now conservatively regridded, and missing data at longitude=0 and after leap days has been added. Please update these files in your training pipeline if you used them before.

- Therefore, we release s2s-ai-challenge-template v0.4. For an overview of the changes, please see CHANGELOG. The s2saichallengescorer now uses these updated observations.

2021-06-29:

- More details about the review process added, see leaderboard.

- Small change to the rules:

- “The decision about the review grade is final and there will be no correspondence about the review.”

- The organizers freeze the rules.

2021-06-19:

- Small change to the rules after feedback from the town halls:

- One team can only get one prize.

- Previously, one person could only join one team. NEW: One person can join at most three teams. Teams with overlapping members must use considerably different methods for both to be considered for prizes.

- Prizes are only issued if the method beats the re-calibrated ECMWF benchmark AND CLIMATOLOGY.


- Answers to many questions asked in the town hall meetings can be found in the FAQ

- climetlab_s2s_ai_challenge.extra.forecast_like_observations converts observations with a time dimension to the same dimensions as initialized forecasts with dimensions forecast_time and lead_time. This helper function can be used for training/hindcast-input with model='ncep' and any other initialized forecasts (e.g. from SubX or S2S, see the IRIDL.ipynb example):

```python
from climetlab_s2s_ai_challenge.extra import forecast_like_observations
import climetlab as cml
import climetlab_s2s_ai_challenge

print(climetlab_s2s_ai_challenge.__version__, cml.__version__)  # must be >= 0.7.1, 0.8.0

forecast = cml.load_dataset('s2s-ai-challenge-training-input',
                            date=20100107, origin='ncep', parameter='tp',
                            format='netcdf').to_xarray()

obs_lead_time_forecast_time = cml.load_dataset('s2s-ai-challenge-observations',
                                               parameter=['pr', 't2m']).to_xarray(like=forecast)

# equivalent
obs_ds = cml.load_dataset('s2s-ai-challenge-observations', parameter=['pr', 't2m']).to_xarray()
obs_lead_time_forecast_time = forecast_like_observations(forecast, obs_ds)
obs_lead_time_forecast_time
```

```
<xarray.Dataset>
Dimensions:        (forecast_time: 12, latitude: 121, lead_time: 44, longitude: 240)
Coordinates:
    valid_time     (forecast_time, lead_time) datetime64[ns] 1999-01-08 ... 2...
  * latitude       (latitude) float64 90.0 88.5 87.0 85.5 ... -87.0 -88.5 -90.0
  * longitude      (longitude) float64 0.0 1.5 3.0 4.5 ... 355.5 357.0 358.5
  * forecast_time  (forecast_time) datetime64[ns] 1999-01-07 ... 2010-01-07
  * lead_time      (lead_time) timedelta64[ns] 1 days 2 days ... 43 days 44 days
Data variables:
    t2m            (forecast_time, lead_time, latitude, longitude) float32 ...
    tp             (forecast_time, lead_time, latitude, longitude) float32 na...
Attributes:
    script:   climetlab_s2s_ai_challenge.extra.forecast_like_observations
```

- Town hall recordings for June 2nd and June 10th and slides are available

- Applicants for EWC compute resources were contacted on June 16th with a concrete proposal of computational resources and asked to provide a reason why they could not participate without these resources, if they had not answered this question before. ECMWF can provide 20 machines; please use the resources responsibly and notify us if you no longer need them. You can still ask Aaron whether an EWC compute instance is currently unused.

- Rok from SDSC offers to deliver a renku workshop; please indicate your interest here.

- s2saichallengescorer clips all RPSS grid cells to the interval [-10, 1]. Where NaN is provided but a number is expected, we penalize with -10. !2 !9

- Updated the template file for submissions in s2s-ai-challenge-template and submissions, with coordinate descriptions in the html repr.

- Refined the description of how biweekly aggregates are computed, see aggregate_biweekly and the attributes in the submissions template file. Recomputed the biweekly observations, which will be used by s2saichallengescorer (s2s-ai-challenge#22).

- Added IRIDL.ipynb with server-side preprocessing for S2S and SubX models, as well as setting your IRIDL cookie to access restricted S2S output.

- Please remember to git add current_notebook.ipynb && git commit -m 'message' before git tag to ensure that the up-to-date notebook version is also tagged. Please also consider linting your code.

- For changes to s2s-ai-challenge-template, see CHANGELOG.md and preferably use release v0.3.1.

- Participants are encouraged to submit their 2020 forecasts (instructions) well before the end of the competition to ensure that their submissions are successfully scored by the s2saichallengescorer. Check here whether your submission committed in the last 24h was successfully checked by s2saichallengescorer; no numerical scores are shown, only completed or failed. Note that we provide the scoring script as skill_by_year and the ground truth data for responsible use only.
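Conceptually, forecast_like_observations pairs every forecast_time with every lead_time and takes the observation at valid_time = forecast_time + lead_time. The numpy sketch below illustrates only that idea; obs_to_forecast_dims is a hypothetical helper, not the climetlab implementation, which operates on xarray objects.

```python
import numpy as np


def obs_to_forecast_dims(obs_time, obs_values, forecast_times, lead_days):
    """Arrange a daily observation series on (forecast_time, lead_time) axes.

    Hypothetical helper: each cell holds the observation at
    valid_time = forecast_time + lead_time, or NaN if none exists.
    obs_time must be sorted ascending (datetime64[D]).
    """
    lead = np.asarray(lead_days).astype("timedelta64[D]")
    valid_time = forecast_times[:, None] + lead[None, :]
    # Locate each valid_time on the observation time axis
    idx = np.clip(np.searchsorted(obs_time, valid_time), 0, len(obs_time) - 1)
    found = obs_time[idx] == valid_time
    values = np.where(found, obs_values[idx], np.nan)
    return valid_time, values


# Usage with a synthetic daily series (value = day index since 2020-01-01)
obs_time = np.arange(np.datetime64("2020-01-01"), np.datetime64("2020-03-01"))
obs_values = np.arange(obs_time.size, dtype=float)
forecast_times = np.array(["2020-01-02", "2020-01-09"], dtype="datetime64[D]")
valid_time, values = obs_to_forecast_dims(obs_time, obs_values, forecast_times, [1, 2, 60])
```

A lead time whose valid_time falls outside the observation record (here 60 days) is filled with NaN rather than the nearest observation.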

2021-05-31: Template repository and scorer bot ready

- We will start accepting submissions from tomorrow June 1st to October 31st 2021.

- The template on renkulab.io is ready to use. You can also work on such a git repository locally. Please play around, raise questions or report bugs in the competition issue tracker.

- Follow these steps to join the competition.

2021-05-27: Town hall dates and EWC compute deadline changes

The organizers slightly adapted the rules:

- The numerical RPSS scores of the training period must be made available in the training notebook, see example.

- The safeguards for reproducibility in the training and prediction template have been adapted:

- Code to reproduce runs within a day: code to reproduce training and predictions should preferably run within a day on the described architecture. If the training takes longer than a day, please justify why this is needed. Please do not submit training pipelines that take several weeks to train.

The organizers invite everyone to join two town hall meetings:

The meetings will include a 15-minute presentation on the competition rules and technical aspects, followed by a 45-minute Q&A discussion.

A first version of the s2s-ai-challenge-template repository is released. Please fork again or rebase.

The deadline to apply for EWC compute access is shifted to 15th June 2021. Please use the competition registration form to explain why you need compute resources. Please note that ECMWF only provides access to EWC, not detailed support on how to set up your environments etc.

The RPSS formula has changed to incorporate the RPSS with respect to climatology, see evaluation.

2021-05-10: Rules adapted to discourage overfitting

The organizers modified the rules:

- The codes used must be fully documented, with details of the safeguards enacted to prevent overfitting, see checked safeguards in template.

- Code to reproduce submissions must be made available between November 1st and November 5th 2021 to enable open peer-review.

- The RPSS leaderboard will remain hidden until early November 2021, once all participants have made their code public.

- The final leaderboard will be ranked based on the numerical RPSS and a grade from expert peer-review for the top submissions.

- To have more time for this review, we shift the announcement of prizes into February 2022.

- Methods used to create the 2020 forecasts must perform similarly on new, unseen data. Therefore, do not overfit.

- The organizers reserve the right to disqualify submissions if overfitting is suspected.

- To be considered for computational resources at EWC, you need to register by July 1st 2021 and explain why you cannot train your ML model elsewhere.

The organizers are aware that overfitting is an issue if the ground truth is accessible. A more robust verification would require predicting future states with weekly submissions over a year, which would take much longer, since a full year of new observations would first have to become available. Therefore, we decided against a real-time competition to shorten the project length and keep momentum high. Over time we will all see which methods genuinely have skill and which overfitted their available data.

Description

The World Meteorological Organization (WMO) is launching an open prize challenge to improve current forecasts of precipitation and temperature from today’s best computational fluid dynamical models 3 to 6 weeks into the future using Artificial Intelligence and/or Machine Learning techniques. The challenge is part of the Subseasonal-to-Seasonal Prediction Project (S2S Project), coordinated by the World Weather Research Programme (WWRP)/World Climate Research Programme (WCRP), in collaboration with Swiss Data Science Center (SDSC) and European Centre for Medium-Range Weather Forecasts (ECMWF).

Improved sub-seasonal to seasonal (S2S) forecast skill would benefit multiple user sectors immensely, including water, energy, health, agriculture and disaster risk reduction. The creation of an extensive database of S2S model forecasts has provided a new opportunity to apply the latest developments in machine learning to improve S2S prediction of temperature and total precipitation forecasts up to 6 weeks ahead, with focus on biweekly averaged conditions around the globe.

The competition will be implemented on the platform of Renkulab at the Swiss Data Science Center (SDSC), which hosts all the codes and scripts. The training and verification data will be easily accessible from the European Weather Cloud and relevant access scripts will be provided to the participants. All the codes and forecasts of the challenge will be made open access after the end of the competition.

This is the landing page of the competition presenting static information about the competition. For code examples and how to contribute, please visit the contribution template repository on renkulab.io.

Timeline

- 4th May 2021: Announcement of the competition

- 1st June 2021: Start of the competition (First date for submissions)

- Town hall meetings for Q&A:

- 1st July 2021: Freeze the rules of the competition

- 31st October 2021: End of the competition (Final date for submissions)

- 1st November 2021: Participants make their code public

- 10th November 2021: Organizers make RPSS leaderboard public

- 1st November 2021 - January 2022: open peer review, and expert peer review of the top submissions

- February 2022: Release final leaderboard (based on RPSS and review grades); Announcement of the prizes

Prize

Prizes will be awarded to the top three submissions evaluated by RPSS and peer-review scores, which must beat the calibrated ECMWF benchmark and climatology. The ECMWF recalibration has been performed by using the tercile boundaries from the model climatology rather than from observations. The prizes are:

- Winning team: 15000 CHF

- 2nd team: 10000 CHF

- 3rd team: 5000 CHF

The 3rd prize is reserved for the top submission from a developing country, least developed country or small island state as per the UN list (see tables C, F, H p.166ff). If such a submission is already among the top two, the third-ranked submission will get the 3rd prize.
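The recalibration principle mentioned above can be sketched as follows: tercile edges come from the model's own climatology (for example its hindcasts), and the real-time ensemble is converted to category probabilities with those edges, so that a systematic model bias does not shift the probabilities. This is a minimal numpy illustration with made-up numbers and hypothetical function names, not the code used for the ECMWF benchmark.

```python
import numpy as np


def tercile_edges(climatology):
    """Lower and upper tercile boundaries of a climatological sample."""
    return np.quantile(climatology, [1 / 3, 2 / 3])


def tercile_probabilities(ensemble, edges):
    """Fraction of ensemble members below, between and above the tercile edges."""
    lower, upper = edges
    below = np.mean(ensemble < lower)
    above = np.mean(ensemble > upper)
    return np.array([below, 1.0 - below - above, above])


# Hypothetical data: the model climatology runs 5 units warmer than the observed one
model_climatology = np.arange(30, dtype=float)
obs_climatology = model_climatology - 5.0
ensemble = np.array([5.0, 12.0, 15.0, 25.0, 28.0])  # real-time ensemble members

probs_model = tercile_probabilities(ensemble, tercile_edges(model_climatology))
probs_obs = tercile_probabilities(ensemble, tercile_edges(obs_climatology))
```

With edges from the model climatology, the probabilities are [0.2, 0.4, 0.4]; with the observation-based edges, the warm bias carries over and the forecast becomes [0.0, 0.4, 0.6].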

Predictions

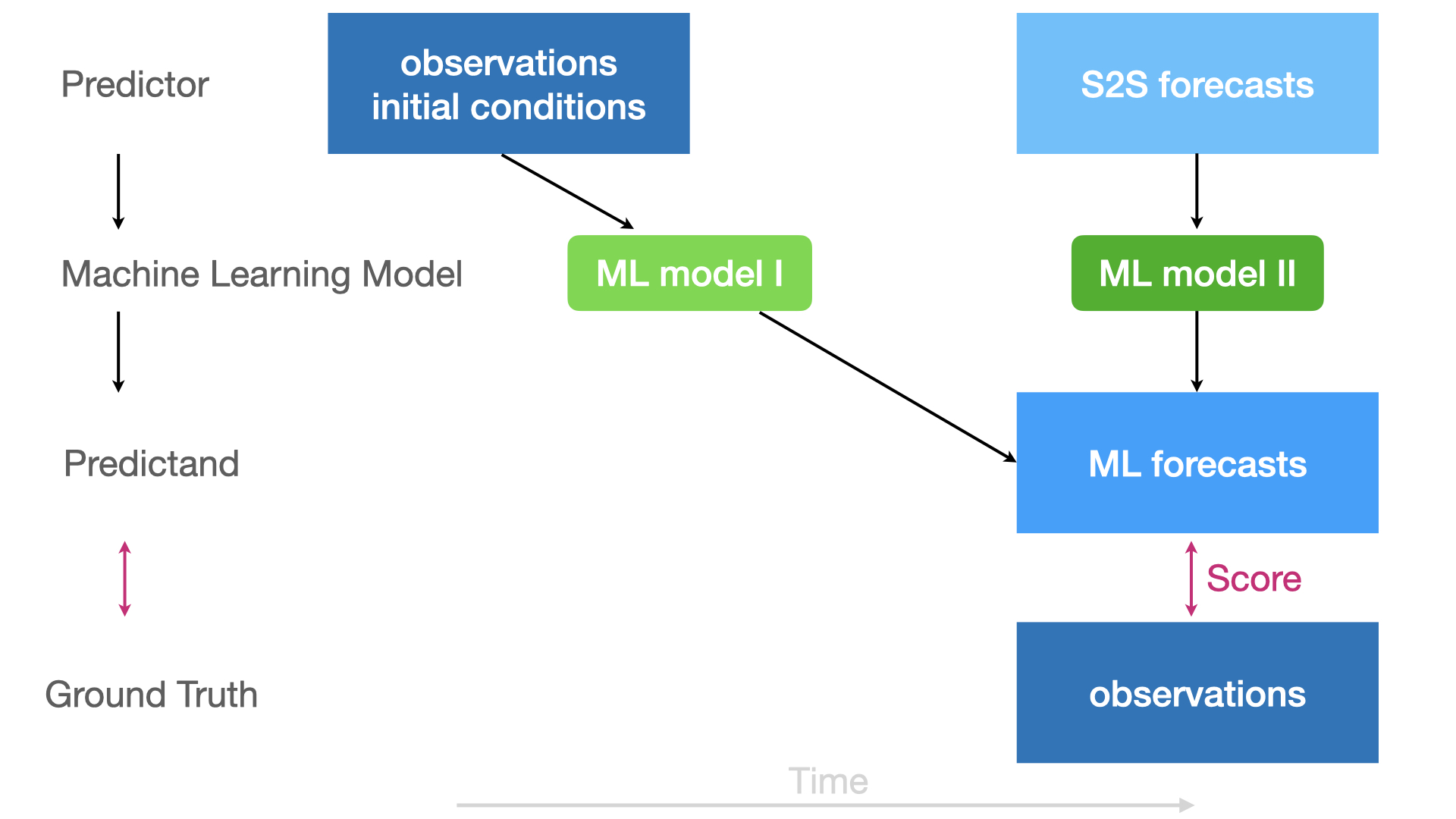

The organizers envisage two different approaches for Machine Learning-based predictions, which may be combined. Predict the week 3-4 & 5-6 state based on:

- I. the initial conditions (observations at forecast_time)

- II. the week 3-4 & 5-6 S2S forecasts

For the exact valid_times to predict, see timings. For the data to use for training, see data sources. Please comply with the rules.

Evaluation

The objective of the competition is to improve week 3-4 (weeks 3 plus 4) and 5-6 (weeks 5 plus 6) subseasonal global probabilistic 2m temperature and total precipitation tercile forecasts issued in the year 2020 by using Machine Learning/Artificial Intelligence.

The evaluation will be continuously performed by a s2saichallengescorer bot, following the verification notebook.

Submissions are evaluated on the Ranked Probability Score (RPS) between the ML-based forecasts and ground truth CPC temperature and accumulated precipitation observations based on pre-computed observations-based terciles calculated in renku_datasets_biweekly.ipynb. This RPS is compared to the climatology forecast in the Ranked Probability Skill Score (RPSS). The ML-based forecasts should beat the re-calibrated real-time 2020 ECMWF and climatology forecasts to be able to win prizes, see end of verification notebook.

RPS is calculated with the open-source package xskillscore over all 2020 forecast_times.

For probabilistic forecasts:

```python
import xskillscore as xs

xs.rps(observations, probabilistic_forecasts, category_edges=None, input_distributions='p', dim='forecast_time')
```

See the xskillscore.rps API for details.
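For tercile probabilities, the RPS is the sum over categories of the squared differences between the cumulative forecast and the cumulative observed probabilities. The hypothetical rps_terciles function below is a minimal numpy sketch of that definition for a single forecast, assuming the unnormalized form; xs.rps additionally averages over the dimensions given in dim. With three categories the maximum is 2, the same value used as the NaN penalty.

```python
import numpy as np


def rps_terciles(obs_probs, fc_probs, axis=-1):
    """Ranked Probability Score for category probabilities along `axis`."""
    cum_obs = np.cumsum(obs_probs, axis=axis)
    cum_fc = np.cumsum(fc_probs, axis=axis)
    return np.sum((cum_fc - cum_obs) ** 2, axis=axis)


climatology = np.full(3, 1 / 3)      # equal-odds tercile forecast
below, near, above = np.eye(3)       # one-hot observed categories

print(rps_terciles(below, climatology))  # 5/9 ≈ 0.556
print(rps_terciles(near, climatology))   # 2/9 ≈ 0.222
print(rps_terciles(above, below))        # 2.0, the worst possible score
```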

```python
def RPSS(RPS_ML, RPS_clim):
    """Ranked Probability Skill Score with respect to climatology.

    +---------+-------------------------------------------+
    | Score   | Description                               |
    +---------+-------------------------------------------+
    | 1       | maximum, perfect improvement              |
    +---------+-------------------------------------------+
    | (0, 1)  | positive means ML better than climatology |
    +---------+-------------------------------------------+
    | 0       | equal performance                         |
    +---------+-------------------------------------------+
    | (-∞, 0) | negative means ML worse than climatology  |
    +---------+-------------------------------------------+
    """
    return 1 - RPS_ML / RPS_clim
```

The RPS_ML and RPS_clim are first calculated on each grid cell over land globally on a 1.5 degree grid. In grid cells where numerical values are expected but NaNs are provided, the RPS is penalized with 2. The gridded RPSS=1-RPS_ML/RPS_clim is calculated from the ML-based RPS averaged over all forecast_times and the climatological RPS averaged over all forecast_times. The RPSS values are clipped to the interval [-10, 1].

This gridded RPSS is then spatially averaged (weighted (np.cos(np.deg2rad(ds.latitude)))) over [90N-60S] land points and further averaged over both variables and both lead_times. Please note that the observational probabilities are applied with a dry mask on total precipitation tp evaluation as in Vigaud et al. 2017, i.e. we exclude grid cells where the observations-based lower tercile edge is below 1 mm/day. Please find the ground truth compared against here.
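The steps described above (NaN penalty, averaging over forecast_time before taking the ratio, clipping to [-10, 1], and the cos-latitude weighted mean over the evaluated region) can be sketched with numpy as below. The array layout (forecast_time, latitude, longitude), the function names, and the tp_lower_edge input for the dry mask (assumed to be in mm/day) are assumptions for illustration; the verification notebook remains the reference.

```python
import numpy as np

RPS_NAN_PENALTY = 2.0  # worst possible RPS with three categories


def evaluation_mask(land, lat, tp_lower_edge=None):
    """Scored grid cells: land points in 90N-60S and, for tp, the dry mask.

    land: bool array (lat, lon); lat: 1D latitudes in degrees north.
    tp_lower_edge: hypothetical (lat, lon) array of the observations-based
    lower tercile edge of tp, assumed to be in mm/day.
    """
    mask = land & (lat[:, None] >= -60)
    if tp_lower_edge is not None:
        mask = mask & (tp_lower_edge >= 1.0)  # exclude cells below 1 mm/day
    return mask


def gridded_rpss(rps_ml, rps_clim, mask):
    """Gridded RPSS from RPS arrays shaped (forecast_time, lat, lon)."""
    # Penalize NaNs where a number is expected with RPS = 2
    rps_ml = np.where(mask & np.isnan(rps_ml), RPS_NAN_PENALTY, rps_ml)
    # Average each RPS over forecast_time first, then take the ratio
    rpss = 1 - rps_ml.mean(axis=0) / rps_clim.mean(axis=0)
    rpss = np.clip(rpss, -10, 1)
    return np.where(mask, rpss, np.nan)


def spatial_mean(rpss, lat):
    """cos(latitude)-weighted mean over the non-NaN grid cells."""
    weights = np.broadcast_to(np.cos(np.deg2rad(lat))[:, None], rpss.shape)
    valid = ~np.isnan(rpss)
    return np.sum(np.where(valid, rpss * weights, 0.0)) / np.sum(np.where(valid, weights, 0.0))


# Usage on a hypothetical 2 x 2 grid with two forecast times
lat = np.array([0.0, 60.0])
land = np.array([[True, True], [False, True]])
mask = evaluation_mask(land, lat)
rps_ml = np.full((2, 2, 2), 0.25)
rps_ml[0, 1, 1] = np.nan  # a missing value where a number is expected
rps_clim = np.full((2, 2, 2), 0.5)
rpss = gridded_rpss(rps_ml, rps_clim, mask)
```

In this sketch, the final score would then be the mean of four such spatial means: t2m and tp, each at both lead times.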

For diagnostics, we will further host leaderboards for the two variables in three regions in November 2021:

- Northern extratropics [90N-30N]

- Tropics (29N-29S)

- Southern extratropics [30S-60S]

Please find more details in the verification notebook.

Submissions

We expect submissions to cover all biweekly week 3-4 and week 5-6 forecasts issued in 2020, see timings. We expect one submission netcdf file for all 53 forecasts issued on Thursdays in 2020. Submissions must be gridded on a global 1.5 degree grid.
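The 53 forecast_time values and the corresponding valid_time coordinate can be generated with numpy datetime arithmetic. This is a minimal sketch; it uses day precision for readability, whereas the template stores datetime64[ns].

```python
import numpy as np

# Weekly Thursday start dates in 2020 (2020-01-02 is a Thursday)
forecast_time = np.arange(np.datetime64("2020-01-02"), np.datetime64("2021-01-01"),
                          np.timedelta64(7, "D"))
lead_time = np.array([14, 28], dtype="timedelta64[D]")  # week 3-4, week 5-6

# valid_time(lead_time, forecast_time) = forecast_time + lead_time
valid_time = forecast_time[None, :] + lead_time[:, None]

print(forecast_time.size)  # 53
print(valid_time[1, -1])   # 2021-01-28
```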

Each submission has to be a netcdf file with the following dimension sizes and coordinates:

```
<xarray.Dataset>
Dimensions:        (category: 3, forecast_time: 53, latitude: 121, lead_time: 2, longitude: 240)
Coordinates:
  * forecast_time  (forecast_time) datetime64[ns] 2020-01-02 ... 2020-12-31
  * latitude       (latitude) float64 90.0 88.5 87.0 85.5 ... -87.0 -88.5 -90.0
  * lead_time      (lead_time) timedelta64[ns] 14 days 28 days
  * longitude      (longitude) float64 0.0 1.5 3.0 4.5 ... 355.5 357.0 358.5
    valid_time     (lead_time, forecast_time) datetime64[ns] 2020-01-16 ... 2...
  * category       (category) object 'below normal' 'near normal' 'above normal'
Data variables:
    t2m            (category, lead_time, forecast_time, latitude, longitude) float32 ...
    tp             (category, lead_time, forecast_time, latitude, longitude) float32 ...
Attributes:
    author:        Aaron Spring
    author_email:  aaron.spring@mpimet.mpg.de
    comment:       created for the s2s-ai-challenge as a template for the web...
    website:       https://s2s-ai-challenge.github.io/#evaluation
```

Metadata of the coordinates and data variables in the template file:

- forecast_time (datetime64[ns], 53 weekly values 2020-01-02 ... 2020-12-31): long_name "initial time of forecast", standard_name forecast_reference_time. The forecast reference time in NWP is the data time, the time of the analysis from which the forecast was made. It is not the time for which the forecast is valid.

- latitude (float64, 90.0 88.5 87.0 ... -88.5 -90.0): long_name latitude, standard_name latitude, stored_direction decreasing, units degrees_north.

- lead_time (timedelta64[ns], 14 days 28 days): standard_name forecast_period, long_name "lead time". Forecast period is the time interval between the forecast reference time and the validity time. The pd.Timedelta corresponds to the first day of a biweekly aggregate: week34_t2m is mean[14 days, 27 days], week56_t2m is mean[28 days, 41 days], week34_tp is 28 days minus 14 days, week56_tp is 42 days minus 28 days.

- longitude (float64, 0.0 1.5 3.0 ... 355.5 357.0 358.5): long_name longitude, standard_name longitude, units degrees_east.

- valid_time (lead_time, forecast_time; datetime64[ns], 2020-01-16 ... 2021-01-28): long_name "validity time", standard_name time, description "time for which the forecast is valid", calculated as forecast_time + lead_time.

- category (object, 'below normal' 'near normal' 'above normal'): long_name "tercile category probabilities", units 1. Probabilities for three tercile categories. All three tercile category probabilities must add up to 1.

- t2m (category, lead_time, forecast_time, latitude, longitude; 9234720 float32 values): long_name "Probability of 2m temperature in tercile categories", units 1, comment "All three tercile category probabilities must add up to 1.", aggregate_week34 mean[14 days, 27 days], aggregate_week56 mean[28 days, 41 days], variable_before_categorization https://confluence.ecmwf.int/display/S2S/S2S+Surface+Air+Temperature

- tp (category, lead_time, forecast_time, latitude, longitude; 9234720 float32 values): long_name "Probability of total precipitation in tercile categories", units 1, comment "All three tercile category probabilities must add up to 1.", aggregate_week34 28 days minus 14 days, aggregate_week56 42 days minus 28 days, variable_before_categorization https://confluence.ecmwf.int/display/S2S/S2S+Total+Precipitation

This template submissions file is available here.
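Before committing a submission, a quick sanity check of the array layout can catch common mistakes. The hypothetical check_tercile_probabilities function below is a numpy sketch, not part of the template or the scorer: it verifies the (category, lead_time, forecast_time, latitude, longitude) shape, which gives 3 × 2 × 53 × 121 × 240 = 9234720 values per variable, and that the three tercile probabilities add up to 1. Accepting cells where all three categories are NaN is an assumption of this sketch.

```python
import numpy as np

# (category, lead_time, forecast_time, latitude, longitude), as in the template file
EXPECTED_SHAPE = (3, 2, 53, 121, 240)


def check_tercile_probabilities(probs, expected_shape=EXPECTED_SHAPE, atol=1e-5):
    """Raise ValueError if `probs` has the wrong shape or invalid probabilities."""
    if probs.shape != tuple(expected_shape):
        raise ValueError(f"expected shape {tuple(expected_shape)}, got {probs.shape}")
    finite = np.isfinite(probs)
    if np.any((probs[finite] < 0) | (probs[finite] > 1)):
        raise ValueError("probabilities must lie in [0, 1]")
    total = probs.sum(axis=0)
    all_nan = np.isnan(probs).all(axis=0)  # assumption: fully empty cells are allowed
    if not np.all(all_nan | np.isclose(total, 1.0, atol=atol)):
        raise ValueError("tercile probabilities must add up to 1")


# Usage on a small hypothetical array with equal-odds probabilities
check_tercile_probabilities(np.full((3, 2, 2), 1 / 3), expected_shape=(3, 2, 2))
```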


We deal with two fundamentally different variables here:

Total precipitation is precipitation flux pr accumulated over lead_time until valid_time and therefore describes a point observation.

2m temperature is averaged over lead_time(valid_time) and therefore describes an average observation.

The submission file data model unifies both approaches and assigns 14 days for week 3-4 and 28 days for week 5-6 marking the first day of the biweekly aggregate.
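The two aggregation rules stated in the template attributes can be sketched with numpy, assuming daily values along the last axis indexed by lead_days and, for tp, a field accumulated since the forecast start. The function names and the synthetic data are hypothetical.

```python
import numpy as np


def biweekly_mean(daily, lead_days, start):
    """Mean over lead days [start, start + 13], e.g. mean[14 days, 27 days] for t2m week 3-4."""
    window = (lead_days >= start) & (lead_days <= start + 13)
    return daily[..., window].mean(axis=-1)


def biweekly_accumulation(accumulated, lead_days, start):
    """Difference of accumulations, e.g. '28 days minus 14 days' for tp week 3-4."""
    i_start = np.flatnonzero(lead_days == start)[0]
    i_end = np.flatnonzero(lead_days == start + 14)[0]
    return accumulated[..., i_end] - accumulated[..., i_start]


lead_days = np.arange(0, 47)       # hypothetical daily lead times in days
t2m = 270.0 + 0.1 * lead_days      # hypothetical daily-mean 2m temperature (K)
tp = 2.0 * lead_days               # hypothetical accumulated precipitation, 2 mm per day

print(biweekly_mean(t2m, lead_days, 14))         # ≈ 272.05 (week 3-4)
print(biweekly_accumulation(tp, lead_days, 14))  # 28.0 (week 3-4)
```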

Submissions have to be committed in git with git lfs in a repository hosted by renkulab.io.

After the competition, the code for training together with the gridded results must be made public, so that the organizers and peer reviewers can check adherence to the rules. Please indicate the resources used (number of CPUs/GPUs, memory, platform; see safeguards in examples) in your scripts/notebooks to allow reproducibility, and document them fully to enable easy interpretation of the code. Submissions that cannot be independently reproduced by the organizers after the competition ends cannot win prizes; please see rules.

Data

Timings

The organizers explicitly chose to run this competition on past 2020 data instead of as a real-time competition, to enable a much shorter competition period and to keep momentum high. We are aware of the dangers of overfitting (see rules) if the ground truth data is accessible.

Please find here an explicit list of the forecast dates required.

1) Which forecast start dates and target periods (weeks 3-4 & 5-6) must be submitted?

- 53 forecasts issued on Thursdays in 2020 (since these are available from all S2S models, including our ECMWF benchmark)

- The first forecast is therefore issued on S = 2 Jan 2020, for the week 3-4 target period 16-29 Jan.
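Each row of the table below follows from the forecast reference time and the aggregation windows mean[14 days, 27 days] and mean[28 days, 41 days]. A minimal sketch with a hypothetical helper name:

```python
import numpy as np


def target_periods(forecast_time):
    """Start and end valid_time of the week 3-4 and week 5-6 periods for one forecast date."""
    t0 = np.datetime64(forecast_time, "D")
    day = np.timedelta64(1, "D")
    return {
        "week 3-4": (t0 + 14 * day, t0 + 27 * day),
        "week 5-6": (t0 + 28 * day, t0 + 41 * day),
    }


print(target_periods("2020-01-02"))
```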

Please find a list of the dates when forecasts are issued (forecast_time with CF standard_name forecast_reference_time) and corresponding start and end in valid_time for week 3-4 and week 5-6.

| forecast_reference_time | week 3-4 start | week 3-4 end | week 5-6 start | week 5-6 end |

|---|---|---|---|---|

| 2020-01-02 | 2020-01-16 | 2020-01-29 | 2020-01-30 | 2020-02-12 |

| 2020-01-09 | 2020-01-23 | 2020-02-05 | 2020-02-06 | 2020-02-19 | |

| 2020-01-16 | 2020-01-30 | 2020-02-12 | 2020-02-13 | 2020-02-26 | |

| 2020-01-23 | 2020-02-06 | 2020-02-19 | 2020-02-20 | 2020-03-04 | |

| 2020-01-30 | 2020-02-13 | 2020-02-26 | 2020-02-27 | 2020-03-11 | |

| 2020-02-06 | 2020-02-20 | 2020-03-04 | 2020-03-05 | 2020-03-18 | |

| 2020-02-13 | 2020-02-27 | 2020-03-11 | 2020-03-12 | 2020-03-25 | |

| 2020-02-20 | 2020-03-05 | 2020-03-18 | 2020-03-19 | 2020-04-01 | |

| 2020-02-27 | 2020-03-12 | 2020-03-25 | 2020-03-26 | 2020-04-08 | |

| 2020-03-05 | 2020-03-19 | 2020-04-01 | 2020-04-02 | 2020-04-15 | |

| 2020-03-12 | 2020-03-26 | 2020-04-08 | 2020-04-09 | 2020-04-22 | |

| 2020-03-19 | 2020-04-02 | 2020-04-15 | 2020-04-16 | 2020-04-29 | |

| 2020-03-26 | 2020-04-09 | 2020-04-22 | 2020-04-23 | 2020-05-06 | |

| 2020-04-02 | 2020-04-16 | 2020-04-29 | 2020-04-30 | 2020-05-13 | |

| 2020-04-09 | 2020-04-23 | 2020-05-06 | 2020-05-07 | 2020-05-20 | |

| 2020-04-16 | 2020-04-30 | 2020-05-13 | 2020-05-14 | 2020-05-27 | |

| 2020-04-23 | 2020-05-07 | 2020-05-20 | 2020-05-21 | 2020-06-03 | |

| 2020-04-30 | 2020-05-14 | 2020-05-27 | 2020-05-28 | 2020-06-10 | |

| 2020-05-07 | 2020-05-21 | 2020-06-03 | 2020-06-04 | 2020-06-17 | |

| 2020-05-14 | 2020-05-28 | 2020-06-10 | 2020-06-11 | 2020-06-24 | |

| 2020-05-21 | 2020-06-04 | 2020-06-17 | 2020-06-18 | 2020-07-01 | |

| 2020-05-28 | 2020-06-11 | 2020-06-24 | 2020-06-25 | 2020-07-08 | |

| 2020-06-04 | 2020-06-18 | 2020-07-01 | 2020-07-02 | 2020-07-15 | |

| 2020-06-11 | 2020-06-25 | 2020-07-08 | 2020-07-09 | 2020-07-22 | |

| 2020-06-18 | 2020-07-02 | 2020-07-15 | 2020-07-16 | 2020-07-29 | |

| 2020-06-25 | 2020-07-09 | 2020-07-22 | 2020-07-23 | 2020-08-05 | |

| 2020-07-02 | 2020-07-16 | 2020-07-29 | 2020-07-30 | 2020-08-12 | |

| 2020-07-09 | 2020-07-23 | 2020-08-05 | 2020-08-06 | 2020-08-19 | |

| 2020-07-16 | 2020-07-30 | 2020-08-12 | 2020-08-13 | 2020-08-26 | |

| 2020-07-23 | 2020-08-06 | 2020-08-19 | 2020-08-20 | 2020-09-02 | |

| 2020-07-30 | 2020-08-13 | 2020-08-26 | 2020-08-27 | 2020-09-09 | |

| 2020-08-06 | 2020-08-20 | 2020-09-02 | 2020-09-03 | 2020-09-16 | |

| 2020-08-13 | 2020-08-27 | 2020-09-09 | 2020-09-10 | 2020-09-23 | |

| 2020-08-20 | 2020-09-03 | 2020-09-16 | 2020-09-17 | 2020-09-30 | |

| 2020-08-27 | 2020-09-10 | 2020-09-23 | 2020-09-24 | 2020-10-07 | |

| 2020-09-03 | 2020-09-17 | 2020-09-30 | 2020-10-01 | 2020-10-14 | |

| 2020-09-10 | 2020-09-24 | 2020-10-07 | 2020-10-08 | 2020-10-21 | |

| 2020-09-17 | 2020-10-01 | 2020-10-14 | 2020-10-15 | 2020-10-28 | |

| 2020-09-24 | 2020-10-08 | 2020-10-21 | 2020-10-22 | 2020-11-04 | |

| 2020-10-01 | 2020-10-15 | 2020-10-28 | 2020-10-29 | 2020-11-11 | |

| 2020-10-08 | 2020-10-22 | 2020-11-04 | 2020-11-05 | 2020-11-18 | |

| 2020-10-15 | 2020-10-29 | 2020-11-11 | 2020-11-12 | 2020-11-25 | |

| 2020-10-22 | 2020-11-05 | 2020-11-18 | 2020-11-19 | 2020-12-02 | |

| 2020-10-29 | 2020-11-12 | 2020-11-25 | 2020-11-26 | 2020-12-09 | |

| 2020-11-05 | 2020-11-19 | 2020-12-02 | 2020-12-03 | 2020-12-16 | |

| 2020-11-12 | 2020-11-26 | 2020-12-09 | 2020-12-10 | 2020-12-23 | |

| 2020-11-19 | 2020-12-03 | 2020-12-16 | 2020-12-17 | 2020-12-30 | |

| 2020-11-26 | 2020-12-10 | 2020-12-23 | 2020-12-24 | 2021-01-06 | |

| 2020-12-03 | 2020-12-17 | 2020-12-30 | 2020-12-31 | 2021-01-13 | |

| 2020-12-10 | 2020-12-24 | 2021-01-06 | 2021-01-07 | 2021-01-20 | |

| 2020-12-17 | 2020-12-31 | 2021-01-13 | 2021-01-14 | 2021-01-27 | |

| 2020-12-24 | 2021-01-07 | 2021-01-20 | 2021-01-21 | 2021-02-03 | |

| 2020-12-31 | 2021-01-14 | 2021-01-27 | 2021-01-28 | 2021-02-10 |
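The table follows a fixed pattern: weekly Thursday starts beginning 2 Jan 2020, with week 3-4 covering valid days 14 to 27 after forecast_reference_time and week 5-6 covering days 28 to 41. The sketch below regenerates the rows with Python's standard library, assuming that pattern holds for every row; `biweekly_windows` is a hypothetical helper, not part of the competition code.

```python
from datetime import date, timedelta

# lead_time in days marking the first valid day of each biweekly aggregate
LEAD_STARTS = {"week 3-4": 14, "week 5-6": 28}
PERIOD_DAYS = 14  # each aggregate covers 14 consecutive valid days


def biweekly_windows(year=2020):
    """Return (forecast_reference_time, {period: (valid_start, valid_end)})
    for every Thursday start in `year`; valid_end is inclusive."""
    s = date(year, 1, 1)
    s += timedelta(days=(3 - s.weekday()) % 7)  # first Thursday (weekday 3)
    rows = []
    while s.year == year:
        windows = {}
        for period, lead in LEAD_STARTS.items():
            first = s + timedelta(days=lead)
            windows[period] = (first, first + timedelta(days=PERIOD_DAYS - 1))
        rows.append((s, windows))
        s += timedelta(weeks=1)
    return rows


rows = biweekly_windows(2020)
print(len(rows))  # 53
for s, w in rows[:2]:
    # first line: 2020-01-02 2020-01-16 2020-01-29 2020-01-30 2020-02-12
    print(s, *(d for window in w.values() for d in window))
```

Comparing this output against the table is a quick sanity check when building forecast_time/lead_time coordinates for a submission.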

2) Which data are “allowed” to be used to make a specific ML forecast?

- For the first forecast, issued S=2 Jan 2020:
  - any observational data up to the day of the forecast start (forecast_time), i.e. 2 Jan 2020
  - any S2S forecast initialized up to and including S=2 Jan 2020
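This rule can be expressed as a date filter applied before assembling the inputs for each forecast. The following is a minimal sketch. It assumes every candidate input carries the date it refers to (observation date or S2S initialization date), and it reads "up to the day of the forecast start" as inclusive. `InputRecord` and `allowed_inputs` are hypothetical names, not part of the competition tooling.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class InputRecord:
    kind: str        # hypothetical labels: "observation" or "s2s_forecast"
    available: date  # observation date, or S2S initialization date


def allowed_inputs(records, forecast_time):
    """Keep only inputs dated on or before forecast_time (no look-ahead).

    Assumption: data dated on forecast_time itself is allowed for both
    observations and S2S forecasts.
    """
    return [r for r in records if r.available <= forecast_time]


records = [
    InputRecord("observation", date(2020, 1, 1)),
    InputRecord("observation", date(2020, 1, 2)),
    InputRecord("observation", date(2020, 1, 3)),   # after S: excluded
    InputRecord("s2s_forecast", date(2020, 1, 2)),
    InputRecord("s2s_forecast", date(2020, 1, 9)),  # after S: excluded
]
usable = allowed_inputs(records, forecast_time=date(2020, 1, 2))
print(len(usable))  # 3
```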

Sources

The main datasets for this competition are available as renku datasets and in climetlab for both variables, temperature and total precipitation. In climetlab, each dataset has one tag for the Machine Learning community and one for the S2S forecasting community; both tags lead to the same data:

| tag in climetlab (ML community) | tag in climetlab (S2S community) | Description | renku dataset(s) |
|---|---|---|---|
| training-input | hindcast-input | deterministic daily lead_time reforecasts/hindcasts initialized once per week 2000 to 2019 on the dates of the 2020 Thursday forecasts, from models ECMWF, ECCC, NCEP | biweekly lead_time: {model}_hindcast-input_2000-2019_biweekly_deterministic.zarr |
| test-input | forecast-input | deterministic daily lead_time real-time forecasts initialized on Thursdays 2020, from models ECMWF, ECCC, NCEP | biweekly lead_time: {model}_forecast-input_2020_biweekly_deterministic.zarr |
| training-output-reference | hindcast-like-observations | CPC daily observations formatted as 2000-2019 hindcasts with forecast_time and lead_time | biweekly lead_time deterministic: hindcast-like-observations_2000-2019_biweekly_deterministic.zarr; probabilistic in 3 categories: hindcast-like-observations_2000-2019_biweekly_terciled.zarr |
| test-output-reference | forecast-like-observations | CPC daily observations formatted as 2020 forecasts with forecast_time and lead_time | biweekly lead_time: forecast-like-observations_2020_biweekly_deterministic.zarr; binary in 3 categories: forecast-like-observations_2020_biweekly_terciled.nc |
| training-output-benchmark | hindcast-benchmark | ECMWF week 3+4 & 5+6 re-calibrated probabilistic 2000-2019 hindcasts in 3 categories | - |
| test-output-benchmark | forecast-benchmark | ECMWF week 3+4 & 5+6 re-calibrated probabilistic real-time 2020 forecasts in 3 categories | ecmwf_recalibrated_benchmark_2020_biweekly_terciled.nc |
| - | - | Observations-based tercile category edges calculated from 2000-2019 | hindcast-like-observations_2000-2019_biweekly_tercile-edges.nc |
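The two tag columns are aliases for the same data, so a small lookup can translate between them and build the renku input file names. This sketch assumes lowercase model names (`ecmwf`, `eccc`, `ncep`) fill the `{model}` placeholder, by analogy with `ecmwf_recalibrated_benchmark_2020_biweekly_terciled.nc`. The dictionaries and `renku_input_file` are illustrative only and are not part of climetlab or renku.

```python
# ML-community tag -> S2S-community tag; both point to the same dataset
ML_TO_S2S = {
    "training-input": "hindcast-input",
    "test-input": "forecast-input",
    "training-output-reference": "hindcast-like-observations",
    "test-output-reference": "forecast-like-observations",
    "training-output-benchmark": "hindcast-benchmark",
    "test-output-benchmark": "forecast-benchmark",
}
S2S_TO_ML = {s2s: ml for ml, s2s in ML_TO_S2S.items()}

MODELS = ("ecmwf", "eccc", "ncep")  # assumption: lowercase names fill {model}
INPUT_YEARS = {"hindcast-input": "2000-2019", "forecast-input": "2020"}


def renku_input_file(tag, model):
    """Biweekly deterministic renku file name for an input tag (either naming)."""
    s2s_tag = ML_TO_S2S.get(tag, tag)  # accept ML or S2S tag
    if s2s_tag not in INPUT_YEARS:
        raise ValueError(f"{tag!r} is not an input dataset tag")
    if model not in MODELS:
        raise ValueError(f"unknown model {model!r}")
    return f"{model}_{s2s_tag}_{INPUT_YEARS[s2s_tag]}_biweekly_deterministic.zarr"


print(renku_input_file("training-input", "ecmwf"))
# ecmwf_hindcast-input_2000-2019_biweekly_deterministic.zarr
```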

Note that the tercile_edges, which separate observations into the categories "below normal" [0, 0.33), "near normal" [0.33, 0.67) and "above normal" [0.67, 1], depend on longitude (240), latitude (121), lead_time (46 days or 2 biweekly), forecast_time.weekofyear (53) and category_edge (2).
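To place a single biweekly observation into one of the three categories, compare it with the two tercile edges that apply to its grid point, lead_time and week of year. The sketch below does this with the standard library. A value equal to an edge goes to the upper category, following the half-open intervals above. The helper names and the example edge values are hypothetical.

```python
from bisect import bisect_right

CATEGORIES = ("below normal", "near normal", "above normal")


def tercile_category(value, edges):
    """Return 0, 1 or 2 for a value given its two tercile edges (lower, upper).

    Half-open intervals: (-inf, lower) -> 0, [lower, upper) -> 1, [upper, inf) -> 2,
    so a value equal to an edge falls into the upper category.
    """
    lower, upper = edges
    if lower > upper:
        raise ValueError("edges must be ordered as (lower, upper)")
    return bisect_right((lower, upper), value)


def one_hot(category, n=3):
    """Binary encoding of an observed category, e.g. 1 -> [0, 1, 0]."""
    return [1 if i == category else 0 for i in range(n)]


edges = (2.0, 5.0)  # illustrative edge values for one grid point
for obs in (1.0, 2.0, 4.9, 5.0, 9.0):
    c = tercile_category(obs, edges)
    print(obs, CATEGORIES[c], one_hot(c))
```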

We encourage the use of subseasonal forecasts from the S2S and SubX projects:

- S2S Project
  - climetlab for models ECMWF, ECCC and NCEP from the European Weather Cloud
  - IRI Data Library (IRIDL) for all S2S models via OPeNDAP
  - s2sprediction.net for data access from ECMWF and CMA

- SubX Project

In addition, any other publicly available data sources (such as CMIP, NMME or DCPP) from dates prior to the forecast_time can be used for training-input and forecast-input.

Purely empirical methods such as persistence or climatology can also be used. The only essential data requirement concerns forecast times and dates; see Timings.

Ground truth sources are NOAA CPC temperature and total precipitation from IRIDL:

- pr: precipitation rate, to be accumulated to tp

- t2m: 2m temperature
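The biweekly targets aggregate daily values over the 14 valid days that start at lead_time 14 (week 3-4) or 28 (week 5-6). This sketch shows that aggregation for one grid point and one forecast. It assumes the input is a list of daily values indexed by days since forecast_time, and that pr is in mm/day, so the 14 daily rates sum to a biweekly total in mm. t2m is averaged instead. It illustrates the arithmetic only and is not the organizers' processing code.

```python
from itertools import accumulate

PERIOD_START = {"week 3-4": 14, "week 5-6": 28}  # lead day of the first valid day
PERIOD_DAYS = 14


def _check(daily):
    needed = max(PERIOD_START.values()) + PERIOD_DAYS  # 42 daily values
    if len(daily) < needed:
        raise ValueError(f"need at least {needed} daily values, got {len(daily)}")


def biweekly_tp(daily_pr):
    """Biweekly precipitation totals (mm) from daily rates (assumed mm/day).

    acc[L] is the accumulation from forecast start over lead days 0..L-1, so
    acc[start + 14] - acc[start] equals the sum of the 14 daily rates in the window.
    """
    _check(daily_pr)
    acc = [0.0, *accumulate(daily_pr)]
    return {p: acc[s + PERIOD_DAYS] - acc[s] for p, s in PERIOD_START.items()}


def biweekly_t2m(daily_t2m):
    """Biweekly mean 2m temperature over the same 14-day windows."""
    _check(daily_t2m)
    return {p: sum(daily_t2m[s:s + PERIOD_DAYS]) / PERIOD_DAYS
            for p, s in PERIOD_START.items()}


pr = [0.0] * 14 + [2.0] * 14 + [0.0] * 14  # rain only during week 3-4
print(biweekly_tp(pr))  # {'week 3-4': 28.0, 'week 5-6': 0.0}
```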

Examples

Join

Follow the steps in the template renku project.

Training

Where to train?

- renkulab.io provides free but limited compute resources: you may use up to 2 CPUs, 8 GB of memory and 10 GB of disk space.

- As renku projects are git repositories under the hood, you can `renku clone` or `git clone` your project onto your own laptop or supercomputer account for the heavy lifting.

- ECMWF may provide limited compute nodes on the European Weather Cloud (EWC), where large parts of the data are stored, upon request. This opportunity is specifically targeted at participants from developing or least developed countries or small island states and/or without institutional computing resources. Please indicate in the registration form why you need compute access and cannot train your model elsewhere. To be considered for such computational resources at EWC, you need to register by June 15th 2021. Please note that we cannot make promises about these resources given the unknown demand.

We are looking for your smart solutions here. Find a quick start template here.

Discussion

Please use the issue tracker in the renkulab s2s-ai-challenge gitlab repository for:

- questions to the organizers

- discussions

- bug reports

We have set up a for informal communication.

FAQ

Answered questions from the issue tracker are regularly transferred to the FAQ.

Leaderboard

RPSS

Please find below all submissions with an RPSS > -1:

| Rank | Project | User | Submission tag | RPSS | Description | Country |
|---|---|---|---|---|---|---|
| 1 | s2s-ai-challenge-template | Bertrand Denis, David Landry, Jordan Gierschendorf, Arlan Dirkson | submission-methods-7 | 0.0459475 | climatology, EMOS, CNN ECMWF and CNN weighting | 🇨🇦 |
| 2 | s2s-ai-challenge-BSC | Sergi Bech, Llorenç Lledó, Lluís Palma, Carlos Gomez, Andrea Manrique | submission-ML_models | 0.0288718 | climo + raw ECMWF + logistic regression + random forest | 🇪🇸 |
| 3 | s2s-ai-challenge-uconn | Shanglin Zhou, Adam Bienkowski | submission-tmp3-0.0.1 | 0.00620681 | RandomForestClassifier | 🇺🇸 |
| 4 | s2s-ai-challenge-kit-eth-ubern | Nina Horat, Sebastian Lerch, Julian Quinting, Daniel Steinfeld, Pavel Zwerschke | submission_local_CNN_final | 0.00236371 | CNN | 🇩🇪🇨🇭🇺🇸 |
| 5 | s2s-ai-challenge-template | Damien Specq | submission-damien-specq | 0.000467425 | Bayesian statistical-dynamical post-processing | 🇫🇷 |
| 6 | s2s-ai-challenge-ECMWF-internal-testers | Matthew Chantry, Florian Pinault, Jesper Dramsch, Mihai Alexe | submission-pplnn-0.0.1 | -0.0301027 | CNN, resnet-CNN or Unet | 🇬🇧🇫🇷🇩🇪 |
| 7 | s2s-ai-challenge-alpin | Julius Polz, Christian Chwala, Tanja Portele, Christof Lorenz, Brian Boeker | submission-resubmit-alpine-0.0.1 | -0.597942 | CNN | 🇩🇪 |
| 8 | s2s-ai-challenge-ylabaiers | Ryo Kaneko, Kei Yoshimura, Gen Hayakawa, Gaohong Yin, Wenchao MA, Kinya Toride | submission_last | -0.756598 | Unet | 🇯🇵🇺🇸 |
| 9 | s2s-ai-challenge-kjhall01 | Kyle Hall & Nachiketa Acharya | submission-kyle_nachi_poelm-0.0.6 | -0.9918 | Probabilistic Output Extreme Learning Machine (POELM) | 🇺🇸 |

Submissions have to beat the ECMWF re-calibrated benchmark and climatology while following the rules to qualify for prizes.

We will also publish RPSS subleaderboards, which are purely diagnostic and show the RPSS for two variables (t2m, tp), two lead_times (weeks 3-4 & 5-6) and three subregions ([90N-30N], (30N-30S), [30S-60S]).
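For orientation, the RPSS compares the ranked probability score (RPS) of a tercile forecast with that of a reference forecast. This sketch assumes climatology (1/3 per category) as the reference. Under that assumption, RPSS = 1 - mean RPS(forecast) / mean RPS(climatology), so 0 means no improvement over climatology and 1 means a perfect forecast. The code works on plain lists and groups grid points into the three latitude bands of the subleaderboards. It omits details of the official scorer, such as area weighting or missing-value handling. All helper names are hypothetical.

```python
CLIM = (1 / 3, 1 / 3, 1 / 3)  # climatological tercile probabilities


def rps(probs, obs_onehot):
    """Ranked probability score: squared differences of cumulative probabilities."""
    cp = co = score = 0.0
    for p, o in zip(probs, obs_onehot):
        cp += p
        co += o
        score += (cp - co) ** 2
    return score


def rpss(forecasts, observations, reference=None):
    """1 - mean RPS(forecast) / mean RPS(reference); reference defaults to climatology."""
    if reference is None:
        reference = [CLIM] * len(observations)
    n = len(observations)
    rps_f = sum(rps(f, o) for f, o in zip(forecasts, observations)) / n
    rps_r = sum(rps(r, o) for r, o in zip(reference, observations)) / n
    return 1.0 - rps_f / rps_r


def subregion(lat):
    """Latitude band of the subleaderboards: [90N-30N], (30N-30S), [30S-60S]."""
    if 30 <= lat <= 90:
        return "90N-30N"
    if -30 < lat < 30:
        return "30N-30S"
    if -60 <= lat <= -30:
        return "30S-60S"
    return None  # south of 60S: not part of any subregion


def rpss_by_subregion(lats, forecasts, observations):
    """Compute one RPSS per latitude band from per-grid-point forecasts."""
    groups = {}
    for lat, f, o in zip(lats, forecasts, observations):
        region = subregion(lat)
        if region is not None:
            fcsts, obss = groups.setdefault(region, ([], []))
            fcsts.append(f)
            obss.append(o)
    return {r: rpss(fcsts, obss) for r, (fcsts, obss) in groups.items()}


obs = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]
print(rpss([CLIM] * 3, obs))  # 0.0, climatology has no skill
print(rpss([tuple(map(float, o)) for o in obs], obs))  # 1.0, perfect forecast
```

The mean RPS is pooled before taking the ratio. This avoids very negative per-point skill scores wherever the reference RPS is small.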

Peer review

From November 2021 to January 2022, there will be two peer review processes:

- open peer review for all submissions

- expert peer-reviews for the top ranked submissions

Peer review will evaluate:

- whether the method is well explained

- whether the code is written in a clean and understandable way

- whether the code gives reproducible results by an independent person

- the originality of the method

- whether the safeguards against overfitting and reproducibility have been followed and overfitting has been avoided

Open peer review

One goal of this challenge is to foster a conversation about how AI/ML can improve S2S forecasts. Therefore, we will open the floor for discussing and evaluating all submitted methods in an open peer review process. The organizers will create a table of all submissions, and everyone is invited to comment on them, as in EGU’s public interactive discussions. This open peer review will be hosted on renku’s gitlab.

Expert peer review

The organizers decided that the top four submissions will be evaluated by expert peer review. This will include 2-3 reviews by experts from the fields of S2S & AI/ML. Additionally, the organizers will host a public showcase session in January 2022, in which these top four submissions can present their methods in 10 minutes, followed by 15 minutes of Q&A. The reviewers will give their review grades after an internal discussion moderated by Andrew Robertson and Frederic Vitart acting as editors.

Based on the criteria above, the expert peer reviewers will give a peer review grade. Comments from the open peer review can be taken into account in the expert peer review grades.

Final

The review grades will be ranked. The RPSS leaderboard will also be ranked. The final leaderboard will be determined from the average of both rankings. When two submissions have the same mean ranking, the review ranking counts more. The top three submissions based on the combined RPSS and expert peer-review score will receive prizes.
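The combination rule can be written down directly. This sketch makes three assumptions: higher RPSS and higher review grades are better, ranks start at 1, and a tie inside a single ranking gets no special treatment (each submission simply takes its sorted position). The submissions "A", "B" and "C" and their numbers are hypothetical inputs for illustration only.

```python
def ranks(scores):
    """Rank submissions by score, 1 = best (higher score is better)."""
    order = sorted(scores, key=scores.get, reverse=True)
    return {name: i + 1 for i, name in enumerate(order)}


def final_ranking(rpss_scores, review_grades):
    """Order by the mean of RPSS rank and review rank; ties go to the better review rank."""
    r_rpss = ranks(rpss_scores)
    r_review = ranks(review_grades)
    names = rpss_scores.keys() & review_grades.keys()
    return sorted(names, key=lambda n: ((r_rpss[n] + r_review[n]) / 2, r_review[n]))


# hypothetical submissions A, B, C
rpss_scores = {"A": 0.04, "B": 0.03, "C": 0.01}
review = {"A": 3.0, "B": 4.5, "C": 4.0}
print(final_ranking(rpss_scores, review))  # ['B', 'A', 'C']
```

The prize winners would then be the first three entries of the returned list.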

Rules

- One team can only win one prize. Teams with overlapping members must use considerably different methods for both to be considered for prizes. One person can join at most three teams.

- Prizes are only issued if the method beats the re-calibrated ECMWF benchmark and climatology.

- To be eligible for the third prize, which is reserved for submissions from developing or least developed countries or small island states, all team members must be residents of such countries.

- Model training must not use ground truth/observation data from after the forecast was issued; see Data Timings.

- Do not overfit: a credible model is one that continues to perform similarly on new, unseen data.

- The RPSS over the training period (e.g. 2000-2019, or whatever period is available for your inputs) must also be provided in the submission; it does not need to be gridded.

- The code used must be fully documented, including details of the safeguards enacted to prevent overfitting; see the checked safeguards in the template.

- The RPSS leaderboard will be made public in early November 2021, once all submissions are public.

- The code and gridded results of all submissions must be made public on November 5th 2021, so that they are accessible for the open peer review and for the expert peer review of the top submissions, which will last until January 2022. Submissions that are not made public on November 1st 2021 will be removed from the leaderboard.

- The decision about the review grade is final and there will be no correspondence about the review.

- The organizers reserve the right to disqualify submissions if overfitting is suspected.

- Prizes will be issued in early February 2022.

Organizers

- WMO/WWRP: Estelle De Coning, Wenchao Cao

- WCRP: Nico Caltabiano, Michel Rixen

- S2S Project: Frederic Vitart, Andy Robertson

- ECMWF: Florian Pinault, Baudouin Raoult

- SDSC: Rok Roskar

- WMO contractor/main contact: Aaron Spring @aaronspring @realaaronspring
